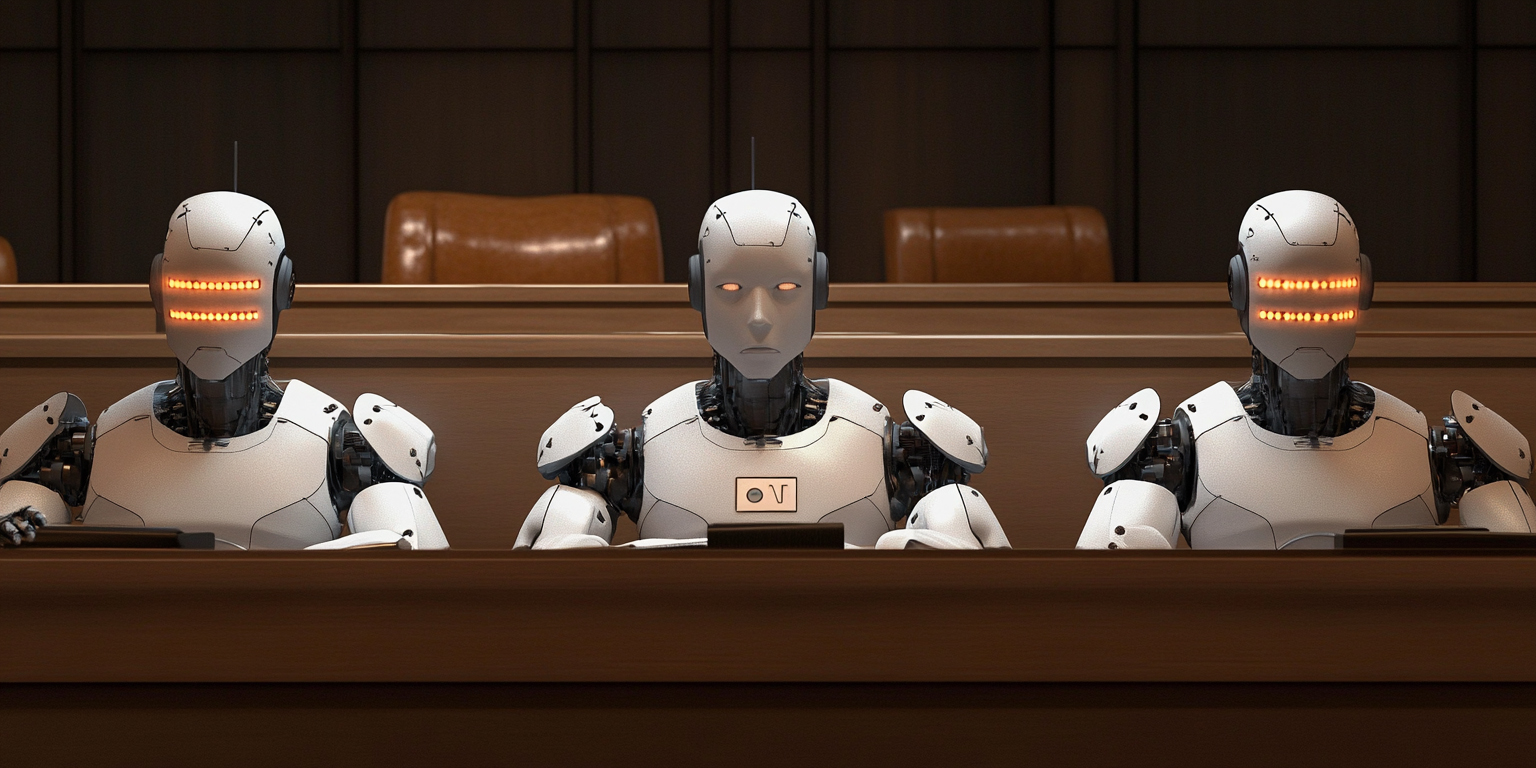

AI Regulation: Balancing Innovation and Safety in 2025

As artificial intelligence accelerates into 2025, the global conversation around its regulation has reached a fever pitch. The promise of AI—transforming industries, enhancing productivity, and solving complex problems—is undeniable, yet so are the risks: deepfake-driven misinformation, privacy breaches, and algorithmic bias threatening fairness. Governments, businesses, and citizens alike are grappling with a central question: how do we harness AI’s potential without unleashing chaos? In 2025, this tension is playing out on an international stage, with the European Union’s AI Act setting a bold benchmark, the United States wrestling with a fragmented approach, and emerging concerns like deepfake misuse pushing regulators to act. The stakes are high, and the debate over balancing innovation with safety is shaping the future of technology.

The EU AI Act: A Global Pace-Setter

The European Union has taken a commanding lead in AI regulation with its AI Act, which entered into force in August 2024 and phases in its obligations over the following years. Most provisions apply from August 2026, with some rules, such as those governing general-purpose AI models, kicking in this year. The Act classifies AI systems by risk level, from “unacceptable” (banned outright) to “minimal” (lightly regulated). High-risk systems, such as those used in hiring or healthcare, face stringent requirements: transparency, robust data governance, and regular audits to prevent bias or harm. As of February 2025, bans on AI practices like social scoring or manipulative behavior have already taken effect, signaling Europe’s zero-tolerance stance on unethical uses.

The EU’s approach is proactive and comprehensive, aiming to foster trust while encouraging innovation. Companies deploying AI must now navigate a web of compliance, but the Act also offers sandboxes—controlled environments where startups can test AI systems—showing a commitment to growth alongside safety. Critics argue it’s too rigid, potentially stifling smaller firms with heavy-handed rules, yet supporters hail it as a model for the world. In 2025, the EU is doubling down, with the newly established AI Office working to enforce these standards, making Europe a testing ground for whether strict regulation can coexist with a thriving tech ecosystem.

The U.S.: A Patchwork in Progress

Across the Atlantic, the United States lags in federal AI legislation, leaving a patchwork of state-level efforts and agency actions to fill the gap in 2025. The Biden-era Executive Order on AI from 2023, which emphasized ethical development, was rescinded in January 2025 by the Trump administration and replaced with a deregulatory push under the “Removing Barriers to American Leadership in Artificial Intelligence” order. This shift prioritizes innovation and competitiveness over oversight, reflecting a belief that markets, not mandates, should shape AI’s future. Yet without a unified federal framework, states like California and Colorado are stepping up. California’s flurry of AI bills in 2024, addressing deepfakes in elections and transparency in AI training data, carries into 2025, while Colorado’s AI Act, effective in 2026, targets algorithmic discrimination in high-stakes decisions.

Federal agencies like the Federal Trade Commission (FTC) are also active, cracking down on deceptive AI claims and privacy violations, as seen in recent enforcement against companies touting biased facial recognition tools. However, this fragmented approach creates uncertainty—businesses operating across state lines face a maze of rules, and gaps in oversight leave room for misuse. In 2025, the U.S. debate centers on whether light-touch policies will spark a tech boom or if the absence of cohesive regulation will let risks like deepfakes and data breaches spiral out of control.

Deepfakes, Privacy, and Bias: The Urgent Drivers

Three issues dominate the regulatory push in 2025: deepfakes, data privacy, and algorithmic bias. Deepfakes (AI-generated fake media) are no longer a niche threat. From election interference to personal fraud, their realism has lawmakers scrambling. California’s “Defending Democracy from Deepfake Deception Act” requires large online platforms to label or remove such content during election seasons, though the law has faced court challenges on free-speech grounds. Globally, China’s deep synthesis rules, in place since 2023, set a precedent by regulating every stage of fake content creation, a model some nations are eyeing.

Data privacy, meanwhile, is a flashpoint as AI systems gobble up personal information to train models. The EU’s General Data Protection Regulation (GDPR) intersects with the AI Act in 2025, demanding privacy-preserving measures for high-risk AI, while U.S. consumers rely on scattershot state laws like the California Privacy Rights Act. Algorithmic bias rounds out the trio, with AI systems in hiring, lending, and policing under scrutiny for perpetuating inequities. Colorado’s forthcoming AI Act obligations and New York City’s bias-audit rules for automated hiring tools highlight a growing consensus: unchecked AI can amplify human flaws, and regulation must intervene.

Innovation vs. Safety: The Tightrope Walk

The core challenge of 2025 is striking a balance. Too little regulation, and society risks a flood of misinformation, eroded privacy, and unfair systems—think deepfakes swaying elections or AI denying loans based on skewed data. Too much, and innovation could stall, with startups crushed by compliance costs and tech giants fleeing to less restrictive regions. The EU bets on structured guardrails, believing safety breeds trust and, in turn, adoption. The U.S. leans on agility, hoping a hands-off stance keeps it ahead of rivals like China, where AI regulation blends safety with state control.

Businesses feel the squeeze either way. A 2025 EY report notes that 25% of enterprises plan AI regulation pilots this year, up from near-zero in 2023, as compliance becomes a competitive edge. Yet, tech leaders warn that overregulation could cede ground to less scrupulous players. The solution, some argue, lies in global cooperation—aligning standards to avoid a regulatory race to the bottom—but 2025 shows little progress here, with transatlantic talks via the EU-U.S. Trade and Technology Council stalling over divergent priorities.

What’s Next for 2025?

As the year unfolds, AI regulation remains a moving target. The EU’s AI Act will hit its stride, with general-purpose AI rules (covering models like those behind ChatGPT) applying from August, requiring developers to publish summaries of their training data and mitigate risks. In the U.S., expect more state-level action, especially in Democratic strongholds, countering federal deregulation. Globally, nations like Japan and Singapore are crafting lighter frameworks, watching the EU and U.S. for lessons. Deepfake crackdowns will intensify, privacy battles will escalate, and bias audits will become standard for responsible firms.

The conversation in 2025 isn’t just about rules—it’s about values. Can AI be both a tool for progress and a safeguard for society? The answer hinges on whether regulators, innovators, and the public can find common ground. For now, the tightrope walk continues, with every step watched by a world eager for breakthroughs but wary of the fallout.